Some questions I wanted to answer empirically with Elixir:

- Does the size of the value in a Map or ETS object impact the time it takes to retrieve a given record (specifically, to find that key among the others)?

- Does the number of keys in ETS or a Map impact the time it takes to retrieve a given record?

So what did I find?

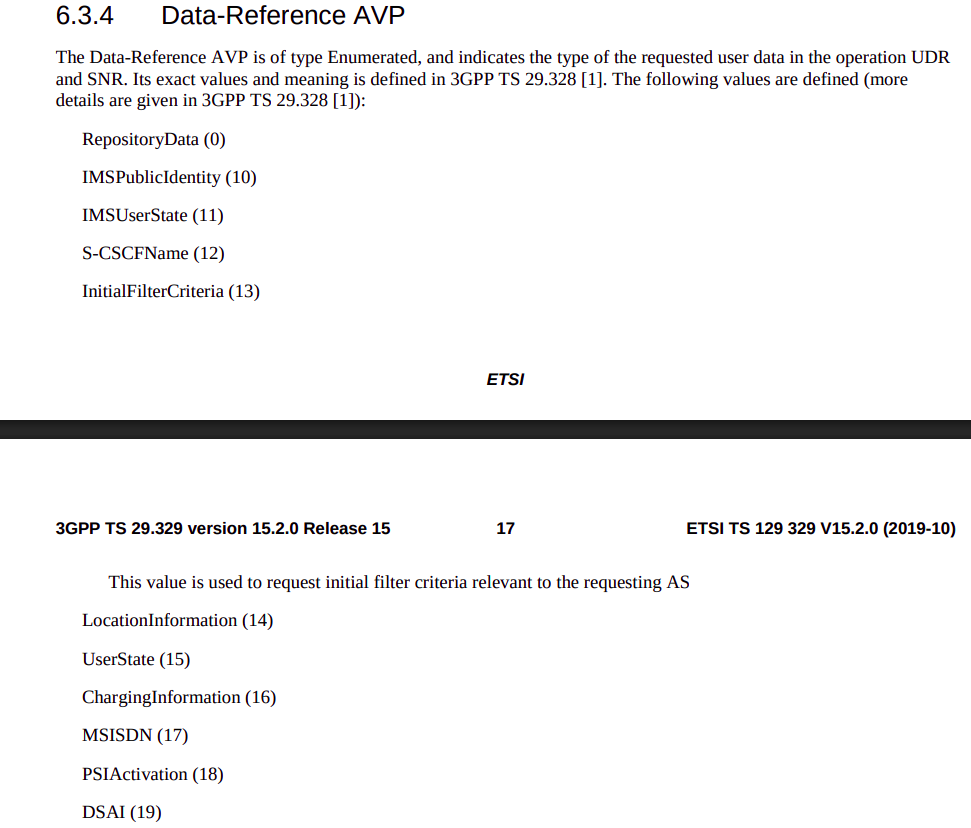

Question: Does the size of the value in a Map or ETS object impact the time it takes to retrieve a given record (specifically, to find that key among the others)?

Answer: In Maps, a 1000x larger payload has a fairly minimal impact on read speed (about 4%, small enough to be noise; in the run below the larger payload was actually the faster one), but with ETS reads are ~30% slower.

Methodology used:

Compared 100,000-byte and 100-byte payloads for read and write times.

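- ETS write throughput is about 21% lower with the larger payload (223 ms vs 177 ms). That’s to be expected, since we’ve got more data to copy.

- ETS read throughput is 30% lower with the larger payload (15,933 ms vs 11,149 ms). This suggests that the size of the payload has a bearing on how quickly a record can be looked up, possibly because ETS copies the stored object into the calling process on every lookup.

- Map write and Map read differ by about 13% and 4% respectively, and in this run the larger payload was the faster one (108 ms vs 125 ms to write, 2,355 ms vs 2,470 ms to read), so payload size has no measurable cost for Maps here.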
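For readers who want to reproduce this kind of comparison, here is a minimal sketch of a harness. It is not the code behind the numbers in this post: the module name `LookupBench`, the use of a binary payload, the random key selection, and the much smaller sizes are all assumptions chosen so the script finishes in well under a second. Only the attribute names `@reads`, `@distinct` and `@payload_bytes` mirror the output shown here.

```elixir
defmodule LookupBench do
  # Hypothetical harness. Sizes are far smaller than the runs in the post.
  @reads 100_000
  @distinct 1_000
  @payload_bytes 100

  def run do
    # Assumption: the payload is a binary of the requested size.
    payload = :binary.copy(<<0>>, @payload_bytes)

    table = :ets.new(:bench, [:set, :public])

    # :timer.tc/1 returns {elapsed_microseconds, result_of_fun}.
    {ets_write_us, _} =
      :timer.tc(fn ->
        Enum.each(1..@distinct, fn key -> :ets.insert(table, {key, payload}) end)
      end)

    {map_write_us, map} =
      :timer.tc(fn ->
        Enum.reduce(1..@distinct, %{}, fn key, acc -> Map.put(acc, key, payload) end)
      end)

    # Pre-generate the keys so random number generation is not part of the timing.
    keys = Enum.map(1..@reads, fn _ -> :rand.uniform(@distinct) end)

    {ets_read_us, _} =
      :timer.tc(fn -> Enum.each(keys, fn key -> :ets.lookup(table, key) end) end)

    {map_read_us, _} =
      :timer.tc(fn -> Enum.each(keys, fn key -> Map.fetch!(map, key) end) end)

    :ets.delete(table)

    %{
      ets_write_us: ets_write_us,
      ets_read_us: ets_read_us,
      map_write_us: map_write_us,
      map_read_us: map_read_us
    }
  end
end

IO.inspect(LookupBench.run())
```

Single runs like this are noisy, so differences of a few percent should be confirmed over several runs before reading much into them.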

```
@reads 14_880_000
@distinct 100_000
@payload_bytes 100000

=== Results ===
Payload gen: 0 ms for 100000 bytes
ETS write: 223 ms (448,430.5 writes/sec)
ETS read: 15933 ms total (933,910.8 reads/sec)
Map write: 108 ms (925,925.9 writes/sec)
Map read: 2355 ms total (6,318,471.3 reads/sec)
```

```
@reads 14_880_000
@distinct 100_000
@payload_bytes 100

=== Results ===
Payload gen: 0 ms for 100 bytes
ETS write: 177 ms (564,971.8 writes/sec)
ETS read: 11149 ms total (1,334,648.8 reads/sec)
Map write: 125 ms (800,000.0 writes/sec)
Map read: 2470 ms total (6,024,291.5 reads/sec)
```
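A "percent slower" figure can mean either a drop in throughput or an increase in elapsed time, and the two give different numbers for the same pair of timings. The sketch below re-derives both from the raw millisecond values in the two result listings above. The module and function names (`Compare`, `throughput/2`, `throughput_drop_pct/2`, `extra_time_pct/2`) are made up for illustration.

```elixir
defmodule Compare do
  # Operations per second for `ops` operations completed in `ms` milliseconds.
  def throughput(ops, ms), do: ops / (ms / 1000)

  # Percentage drop in throughput when elapsed time grows from fast_ms to slow_ms.
  def throughput_drop_pct(fast_ms, slow_ms),
    do: Float.round((1 - fast_ms / slow_ms) * 100, 1)

  # Percentage change in elapsed time going from base_ms to other_ms.
  # A negative result means other_ms was the faster run.
  def extra_time_pct(base_ms, other_ms),
    do: Float.round((other_ms / base_ms - 1) * 100, 1)
end

reads = 14_880_000

# ETS read, 100,000-byte payload: matches the reads/sec printed in the results.
IO.inspect(Compare.throughput(reads, 15_933))

# ETS read, small vs large payload: about 30% lower throughput...
IO.inspect(Compare.throughput_drop_pct(11_149, 15_933))

# ...which is the same thing as about 43% more elapsed time.
IO.inspect(Compare.extra_time_pct(11_149, 15_933))

# Map read, small vs large payload: negative, the larger payload ran faster.
IO.inspect(Compare.extra_time_pct(2_470, 2_355))
```

Stating which of the two measures is meant avoids confusion when comparing runs.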

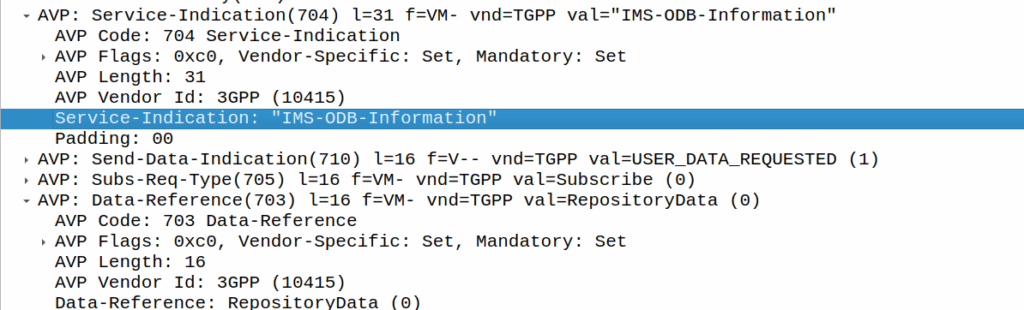

Question: Does the number of keys in ETS or a Map impact the time it takes to retrieve a given record?

Answer: Writing 1,000 records is of course super quick. That much should be obvious; the retrieve/read time is what I’m interested in here.

ETS: Yes, there is a ~40% performance hit when retrieving a record from an ETS table with 14.88M records compared with a table with 1k records. The hit is nowhere near proportional to the key count (14,880x more keys), but there is an impact.

Map: Boy howdy, there’s a 10x increase in the time to get data when the Map is larger. This is probably to do with how simple Maps are compared to ETS, which degrades far more gracefully as the number of records grows.

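This led to an interesting realization: Map is faster on smaller sets of data (fewer keys, not smaller values), but its read advantage over ETS shrinks from roughly 7.5x at 1,000 keys to about 1.3x at 14.88M keys, and ETS writes are already faster at that size (26,176 ms vs 45,503 ms). So ETS becomes the better fit for larger data sets (again, more keys, not counting the values), and there’s a break-even point for ETS usage.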
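One way to look for that break-even point is to sweep the key count and watch the ETS/Map read-time ratio. The sketch below does this under stated assumptions: `BreakEven.read_times/3` is a hypothetical helper, the key counts and read count are small placeholders rather than the sizes used in this post, and the payload is assumed to be a 1,000-byte binary.

```elixir
defmodule BreakEven do
  # Times `reads` random lookups against an ETS table and a Map that each
  # hold `distinct` keys. Returns {distinct, ets_microseconds, map_microseconds}.
  def read_times(distinct, reads, payload) do
    table = :ets.new(:sweep, [:set])
    Enum.each(1..distinct, fn key -> :ets.insert(table, {key, payload}) end)
    map = Map.new(1..distinct, fn key -> {key, payload} end)

    # Same key sequence for both structures, generated outside the timed section.
    keys = Enum.map(1..reads, fn _ -> :rand.uniform(distinct) end)

    {ets_us, _} = :timer.tc(fn -> Enum.each(keys, &:ets.lookup(table, &1)) end)
    {map_us, _} = :timer.tc(fn -> Enum.each(keys, &Map.fetch!(map, &1)) end)

    :ets.delete(table)
    {distinct, ets_us, map_us}
  end
end

payload = :binary.copy(<<0>>, 1000)

results =
  for distinct <- [1_000, 10_000, 100_000] do
    BreakEven.read_times(distinct, 200_000, payload)
  end

for {distinct, ets_us, map_us} <- results do
  # A ratio above 1.0 means the Map was faster; the break-even is where it crosses 1.0.
  ratio = Float.round(ets_us / max(map_us, 1), 2)
  IO.puts("#{distinct} keys: ETS #{ets_us} us, Map #{map_us} us, ETS/Map = #{ratio}")
end
```

Extending the list of key counts toward the millions (memory permitting) would show whether and where the ratio drops below 1.0 on a given machine.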

TL;DR: For ETS, the number of keys has a manageable impact (~40%) on retrieve time. For Map, the number of keys has a very real impact (10x) on read times.

Compared 1,000 distinct keys vs 14.88M distinct keys.

```
@reads 14_880_000
@distinct 1000
@payload_bytes 1000

ETS write: 1 ms (1,000,000.0 writes/sec)
ETS read: 9769 ms total (1,523,185.6 reads/sec)
Map write: 9 ms (111,111.1 writes/sec)
Map read: 1292 ms total (11,517,027.9 reads/sec)
```

```
@reads 14_880_000
@distinct 14_880_000
@payload_bytes 1000

ETS write: 26176 ms (568,459.7 writes/sec)
ETS read: 16363 ms total (909,368.7 reads/sec)
Map write: 45503 ms (327,011.4 writes/sec)
Map read: 12926 ms total (1,151,168.2 reads/sec)
```